Does anyone know where to start for something that involves all of us? (A bit like the climate emergency?)

Many of you may have spotted the headlines about the Prime Minister’s speech on all things AI – and the new ‘Opportunities Action Plan’ (which you can read here), also published today. The Government’s response is in the written statement to Parliament by the Business and Trade Secretary here.

This also comes at a time of huge turmoil in the social media sector as everyone tries to figure out how to respond to the US-based tech firms realigning themselves to the new administration there.

There are tensions within the field – not least between those specialising in AI vs the venture capitalist field that seeks high returns from it

As Rachel Coldicutt OBE states in this thread, the thing that links the various components of the Government’s announcement on AI is money. Understandable given the noise buzzing around The Chancellor over troubles in the financial markets (even though arguably these were the result of things happening abroad rather than from within the UK economy – Prof Jonathan Portes advises the Chancellor to stay steady rather than bring in new cuts).

The fingerprints of the Tony Blair Institute for Global Change are all over this announcement

Have a browse through the articles on the subject on the Institute’s website here. The organisation is not a small one – it has 900 people working for it in a range of policy areas as it states on its website.

“Our 2024 Future of Britain programme and conference will look specifically at the Future of Britain: Governing in the Age of AI. The concept of the Reimagined State is a model that any country can adopt, as technology enables governments to deploy resources strategically, driving down costs while improving outcomes.”

Above: Institute for Global Change – Future of Britain

The rising influence of the Institute was something picked up in The Guardian in Sept 2023

“How will The Government manage the risks associated with AI?”

“Government must protect UK citizens from the most significant risks presented by AI and foster public trust in the technology, particularly considering the interests of marginalised groups. That said, we must do this without blocking the path towards AI’s transformative potential.”

Above – GovUK 13 Jan 2025

It’s not clear *how* it intends to do this – nor do we as yet have a clear set of designated risks that need to be addressed by technically-literate policy-makers *who can communicate well with the wider public*.

There are various articles online that try to summarise the lists – in particular by civil liberties campaigners. Take the Berlin-based Liberties EU here. (If any city in the world is going to be red hot on civil liberties given its history over the past century, it’s Berlin).

- Unemployment

- Lack of transparency (in particular with data sets – and identifying poor quality data)

- Biased & discriminatory algorithms

- Profiling (especially without consent)

- Disinformation

- Environmental Impact

- Domination by big tech firms

Above – 7 risks with AI by Liberties.EU

Unemployment as a result of new technologies is something that we older Millennials lived through, as we saw the record shops and photo processors all but disappear from the high streets in our lifetimes – people switched to digital music players and digital photographs, and then from digital music players to streaming.

Educational risks

Interestingly, the one thing that hasn’t been picked up in the news (yet) but is a more than familiar issue in educational circles is the use of AI for homework. It’s one thing older teenagers being caught plagiarising, but in this strange case study that randomly popped up just now, how many parents are familiar with cases of younger children simply dropping really simple questions into programs and copying and pasting the answers? As a teacher, how do you ensure that the work students and children do is their own? Hence some people making the case (such as Chiraag Shah in the Cambridge student newspaper Varsity on 10 Jan 2025 here) for switching back to hand-written tests and exams.

“Regulatory capture by the Tony Blair Institute?”

That’s my other concern. It doesn’t really matter who is in government, but if too small a group of institutions has such a strong influence over policy – especially a policy that most of the general public is unaware of – that brings a whole host of democratic legitimacy issues. These are being picked up by people working in the fields of using technology to strengthen democracy.

Take Andy Davies of Wholegrain Digital here, where he makes the case for a citizen-first approach rather than an AI-first approach – especially when it comes to the energy consumption of the latter. He and others pointed to the paper from the think tank DEMOS published yesterday on Social Capital 2025: The hidden wealth of nations – co-authored by the former Chief Economist of the Bank of England.

“The language used in the announcement is a master stroke but probably not in the way it was intended. It can’t just be me that thinks comparing a mass AI roll out to intravenous drug use is incredibly insensitive.”

This is a textbook case of why it’s important to have diversity of life experiences in institutions. Someone should have picked up on that in Downing Street’s Comms team and said that was a really poor metaphor. That the PM didn’t pick up on it either is also striking. Furthermore, as Mr Davies says, prioritising energy and fresh water for the maintenance of data centres at the expense of the basic human needs of people is a controversial move to say the least.

“The Big Tech risks – can we simply walk away?”

This is one of the ripostes sometimes thrown at people who find the changing policies of some of the popular social media platforms to be more than alarming. And it has caused some serious soul-searching for a lot of us. I effectively stopped using Birdsite not so long ago – having locked my account and using it as a ‘read only’ function or posting a link to a blogpost. I no longer use it for ‘chat’. Having deleted the apps and shortcuts, I’m using it at only a fraction of the level I had done since late 2010. But as Tamasine McCaig writes here, leaving the platforms won’t actually fix the problem. Furthermore, any technology that becomes mainstreamed into how we live inevitably results in the creation of new jobs and careers involved in the use of those technologies – from the manufacturing of the hardware, to the maintenance and servicing, and ultimately the disposal. Think of the rise and fall of the steam train – and the rise and fall of the working class communities that sustained those industries and allied services in such large numbers. (For example compare the number of workers needed for a steam-and-coal locomotive to function and be serviced, vs an electric locomotive).

That disinformation problem

One of the basics we were taught in both A-level and degree-level economics was that for a perfectly competitive market to function, it needs three things:

- Free and uninhibited movement of capital

- Free and uninhibited movement of labour

- Perfect information

…amongst other things. Such as basic law enforcement systems and functions. (Including but not limited to the enforcement of contract law). There’s an economic history essay for someone to look at how government and institutional policies at an international level have facilitated each of the three, and/or created societal pressures. Is the movement of people across the planet in part following the flows of wealth?

Secondly, given the highly publicised scrapping of fact checkers by one of the big tech firms, how does that ‘perfect information’ assumption fit in with a social media world that’s now full of disinformation? (And state-sponsored through to ‘global celebrity-driven’ disinformation?)

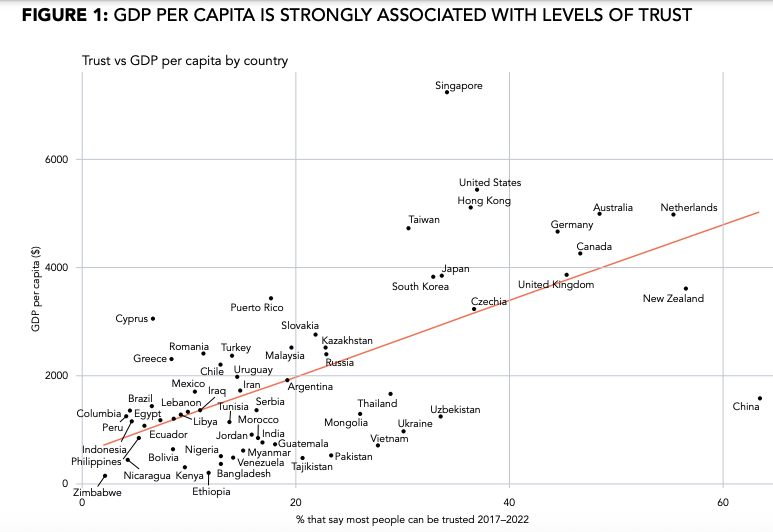

Note that the Social Capital report from Demos published yesterday has the graph below that shows how GDP Per Capital is strongly associated with levels of trust. I imagine you’d find similar with a more complex Human Development Index too – I cannot imagine economies and public services functioning in a society with little trust and high corruption.

Above – Social Capital (2025) Demos p10

“So, what are the solutions?”

Tamasine McCaig lists several here

- Educate for media literacy

- Be active citizens

- Citizens’ Assemblies

- Hold platforms accountable

- Ethical human design

Focusing on the first three (because the last two are effectively the job of a smaller number of institutional actors rather than society-wide), these come back to the wider issues around political engagement with the general public.

- I covered active citizenship looking at civil society strategies here

- I noted Cllr Henry T Hall’s comments on informed citizens and literacy from 1800s Lost Cambridge here, including its application to Citizens’ Assemblies.

- I looked at a ‘Citizens’ Curriculum’ here

At the same time we don’t have the networks of adult education and lifelong learning centres that towns and cities used to have. So what is the process for learning about, and socially adapting to, new technologies? Is there something that we can learn from previous eras such as the rise of computers through to climate change adaptation and mitigation? Or even from previous generations of retrofitting things like piped water and central heating?

Does society have time simply to teach the young ones and let things filter through, or does this generation of decision-makers need to be far more proactive? Because if we are to have some sort of adults’ education programme, ministers need to put rocket boosters under lifelong learning budgets. With a vocational-skills-focused policy, I can’t see that happening – i.e. ministers choosing to fund courses and learning programmes for the civic good rather than for growth only.

Food for thought?

If you are interested in the longer term future of Cambridge, and on what happens at the local democracy meetings where decisions are made, feel free to:

Follow me on BSky <- A critical mass of public policy people seem to have moved here (and we could do with more local Cambridge/Cambs people on there!)

Consider a small donation to help fund my continued research and reporting on local democracy in and around Cambridge.